TL;DR: In ad fraud research, what we have are companies that are neither equipped nor incentivized to invest in serious research, and media that does not know what to believe and what to disregard. Once a company grows to a certain size, it becomes almost impossible for it to keep innovating.

WHAT IS RESEARCH?

What makes research powerful is that once you have found something new, others can validate your findings. This has not been the case with the most-referenced papers.

There is a difference between research and reporting findings. As long as you are just researching, you could violate every principle and rule and still not cause any damage. When you report findings, any findings at all, you have a responsibility for how others are influenced by your reporting.

Whenever research findings are reported, at least the following should be present:

- methodology is explained

- known caveats are explained together with methodology

- sampling and possible violations are explained

- a theoretical background (storyline) is provided

In terms of evidence, there are two very important guidelines:

- if you claim something to be true, provide evidence for it

- if you provide evidence, make sure it’s relevant to your claim

I would also never publish anything before checking it with at least a few peers, preferably ones with an academic background, as they tend to be better trained to review this kind of material.

This is the absolute minimum of what constitutes research when findings are reported. If you have doubts about whether a paper is research, it probably isn’t.

WHAT IS NOT RESEARCH?

Everything else is not research, and it should be clearly labeled as marketing to avoid confusing the media and decision makers.

Marketing reports should not use technical terms like “botnet” or “baseline”.

NOT RESEARCH — BOT BENCHMARK REPORT BY WHITEOPS

I tried to connect with WhiteOps through various channels, reaching out to three different people altogether. Given that it’s a small company, I stopped trying after that.

After carefully reading the paper they published with the ANA earlier in 2015, I was already sceptical about WhiteOps’ intentions and capabilities regarding anti-ad-fraud activity. You can find the ANA white paper here:

This paper turned out to be the single most referenced source of information on ad fraud, especially for what it says about the level of ad fraud exposure in the market. The issue, of course, is that the level indicated by WhiteOps is far lower than anything I have seen in the data I’ve analysed over the past decade while looking for ad fraud exposure. The storyline also made it clear that WhiteOps lacks a substantial theoretical understanding of ad fraud.

Now let’s look at the DCN Bot Benchmark Report and why it is not research, but a piece of marketing collateral. You can find the report here:

A lot of effort went into this report, and real research methods had to be used to report what is being reported here. But what is it saying? First, let’s look at the Major Findings section of the report:

What had happened so far? WhiteOps had completed a hugely successful study with the ANA that downplayed the problem and was nonetheless accepted by more or less everyone in the industry. On closer inspection, it seemed that the detection method used outright missed most ad fraud. Either that, or every other piece of research on ad fraud was wrong. Nobody had ever arrived at a number as low as WhiteOps did.

The other point related to the Major Findings section is: who is paying for the paper? It is a grass-roots trade body that represents the interests of premium digital publishers. The goal of such a body is to attract more money into digital media from traditional channels such as TV. It seems fair to assume that its principal goal for the project

What we have here is a publisher-funded study that uses a bogus method to arrive at an artificially low number, and then compares it to another artificially low number from a previous study by the same company. How come nobody is pointing out how dodgy this is?

The big question concerns the methodology used for detection and for distinguishing sophisticated from unsophisticated bot traffic. And how much does WhiteOps think it is missing altogether? An anti-ad-fraud company can’t operate on the assumption that it detects everything. It has to have a strong theoretical framework for understanding the various ways in which ad fraud manifests, and then use that framework to pick the most important fights. Not only WhiteOps, but none of the other known vendors communicate at this level.

WhiteOps comes out with aggressive marketing on its website:

It is also completely inaccurate to say that it is the only security solution for digital ad fraud. At this point, I’m not entirely sure that what WhiteOps offers can be classified as a security solution at all.

Both Michael Tiffany and Dan Kaminsky know they should be more careful about what they present as credible findings, even if their information security friends do not have a good understanding of ad fraud…

There is so much more, but let’s leave the case there.

As I said before, we tried to contact WhiteOps through botlab.io on several occasions but were not able to establish contact. As a result, we have no information beyond what is presented in the reports.

NOT RESEARCH — XINDI BOTNET BY PIXALATE

Despite many attempts, Pixalate was not able to produce any additional evidence for its claims about the Xindi botnet. Many others and I have concluded that Pixalate’s claims about the Xindi botnet were not just mistaken, but to some extent made up to make the marketing effort more effective.

Here is what some security researchers thought about Pixalate’s work on Xindi the Botnet:

It looks like somewhere along the way Pixalate became confused about the difference between a botnet and botnet activity. Botnet research respects this difference, as otherwise it would not make any sense. By analogy, you could not give many different stars the same name: each star needs its own demarcation, otherwise it could not be studied accurately (or at all). If knowing that a certain kind of botnet activity exists were enough to report a botnet, then anyone could do it.

In this case, Pixalate chose “impression fraud” as the kind of activity their bogus Xindi botnet is made of. They go as far as claiming that this is the first time such a botnet, one focused on impression fraud, has been identified. They also make claims implying that ad fraud previously worked in some other way. The issue with this is that

Pixalate did not just make up the technical part of their claims; the underlying storyline is made up too, and it contradicts what is commonly known.

To avoid spending any more time on this, let’s look at what Pixalate’s disclaimer says:

If it’s just opinion, how come it is marketed so aggressively?! I’m assuming that everyone on their mailing list (and others) got this email on the day the report was released:

At the bottom of the same screen, we can see how Pixalate is in this case positioning itself as a company:

I hope this makes as little sense to you as it does to me.

We’ve now looked at two of the supposed industry leaders and some of the most widely circulated and referenced reports of 2015. It does not look good for 2016 if you ask me, especially as some of the other “leading” companies do not fare any better. This includes the largest company, Integral Ad Science, which is making statements about ad fraud rates going down.

NOT RESEARCH — MEDIA QUALITY REPORT BY INTEGRAL AD SCIENCE

There is a real risk when an advertising technology company grows beyond a certain size. The sales side of the business takes control of everything else, and that, together with the pressure to raise ever more money, makes it very hard to innovate and create something new.

Why it is clear that the Integral Ad Science Media Quality Report is nothing but a way for Integral Ad Science to sell more of its solution:

- not a single word about methodology

- not a single word about possible caveats

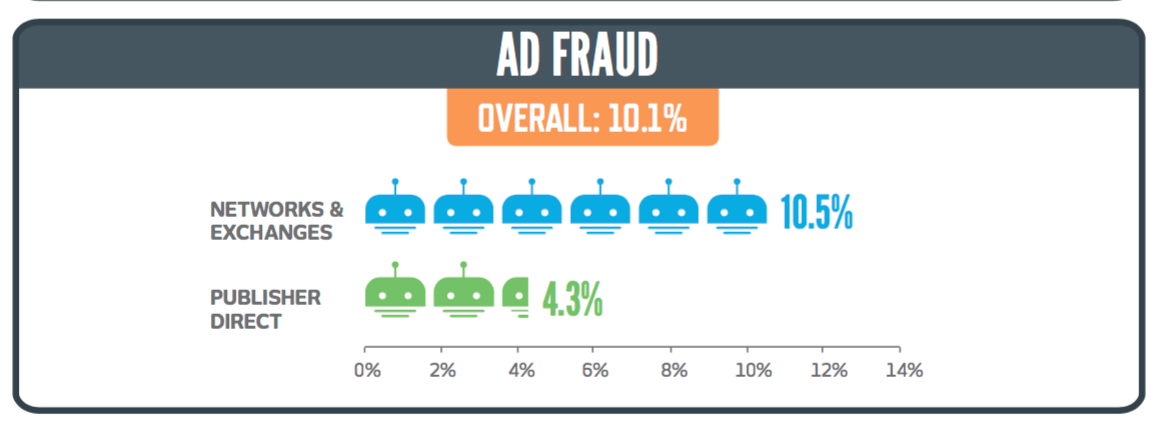

- very low overall reported rate:

By “very low” I mean that the findings suggest a much lower rate than the majority of research does. The rates reported by Integral Ad Science are similar to those reported by WhiteOps.

Whereas Pixalate made some attempt to signal that its work should not be taken seriously (the disclaimer), and WhiteOps made a real effort to present its work as actual research, Integral Ad Science does neither.

Case closed.

EXAMPLES OF RESEARCH

These Madrid-based academic researchers are addressing the right problem and backing it up with the right methodology and evidence.

These Bishop Fox researchers used their free time to come up with some very interesting proofs of concept relevant to ad fraud researchers.

As a last example, here is a blog post from Sentrant, a Halifax-based anti-ad-fraud company, outlining the workings of an ad fraud botnet.

REFERENCING RESEARCH FINDINGS

It is idiotic to write a story based on a marketing report. It is just as dumb to reference an argument made in a marketing paper. The function of a marketing paper is to sell someone’s product or agenda, and marketing papers should never be treated with the same confidence as research papers. There are a few very important reasons for this:

- research papers are generally thoroughly reviewed by peers

- researchers tend to have a broader base of incentives driving their work

- research papers tend to use far more careful language

- claims made in research papers are backed up by objective evidence

When referencing research findings, let’s keep in mind that one commercially incentivised paper is not enough to establish a credible baseline on which further assumptions can be built. On the contrary, it invites researchers to prove the initial claims wrong. In my experience, that would mean showing that the numbers are much higher, and that there is a gap between what these vendors are saying and what is really happening.

As an example of what can happen, we have cio.com parroting, word for word, claims made in a marketing paper by a company with no previous track record of reporting security research findings.

This gap seems to be the dilemma of commercially focused research, as a company can only focus on so many things. For some reason, the media and many other industry stakeholders seem to have accepted the results of the previous WhiteOps paper as “true”, even though that paper presents limited results that conflict with those of other researchers. It is concerning, to say the least, to see how easy it is to get the press to repeat subjective findings as true.